Transformation

Prepare and enrich datasets

Mar 20, 2024

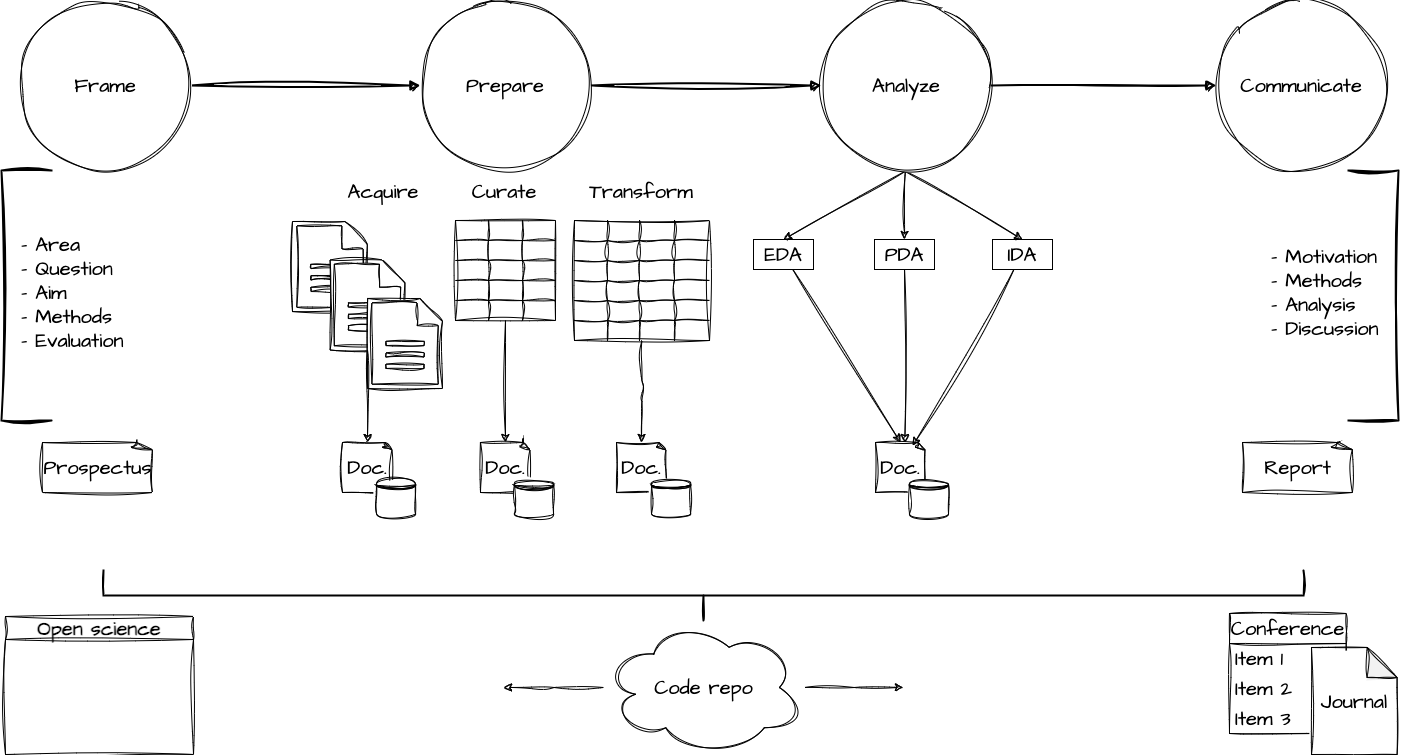

Overview

Preparation

- Normalization

- Tokenization

Enrichment

- Recoding

- Generation

- Integration

Process

Preparation

Normalization

Sanitize and standardize: Removing artifacts, coding anomalies, and other inconsistencies.

| Description | Examples |
|---|---|
| Non-speech annotations | (Abucheos), (A4-0247/98), (The sitting was opened at 09:00) |
| Inconsistent whitespace | 5 % ,, , Palacio' s |
| Non-sentence punctuation | - |
| Abbreviations | Mr., Sr., Mme., Mr, Sr, Mme, Mister, Señor, Madam |
| Text case | The, the, White, white |

Normalizing: example
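A minimal sketch of how the normalization targets in the table might be handled with regular expressions. The `normalize` function, the `ABBREVIATIONS` map, and the sample sentence are illustrative assumptions, not part of the original material; a real corpus would need its own rules.

```python
import re

# Hypothetical abbreviation map (variant -> canonical form); extend per corpus.
ABBREVIATIONS = {
    "mr": "Mr.", "mister": "Mr.",
    "sr": "Sr.", "señor": "Sr.",
    "mme": "Mme.", "madam": "Mme.",
}
ABBREVIATION_PATTERN = re.compile(
    r"\b(" + "|".join(ABBREVIATIONS) + r")\b\.?", flags=re.IGNORECASE
)


def normalize(text, lowercase=False):
    """Apply a few cleanup steps, one regular expression at a time."""
    # Drop parenthesized non-speech annotations such as "(Abucheos)".
    text = re.sub(r"\([^)]*\)", " ", text)
    # Drop dashes that stand alone between spaces (non-sentence punctuation).
    text = re.sub(r"(?<!\S)-(?!\S)", " ", text)
    # Map every abbreviation variant to one canonical spelling.
    text = ABBREVIATION_PATTERN.sub(
        lambda m: ABBREVIATIONS[m.group(1).lower()], text
    )
    # Repair stray spaces: "Palacio' s" -> "Palacio's", "5 %" -> "5%".
    text = re.sub(r"'\s+s\b", "'s", text)
    text = re.sub(r"\s+([,.;:!?%])", r"\1", text)
    # Collapse remaining runs of whitespace.
    text = re.sub(r"\s+", " ", text).strip()
    # Case folding is optional because it discards information (White vs. white).
    return text.lower() if lowercase else text


# Made-up sentence combining several of the issues from the table.
raw = "(Abucheos) Mister Palacio' s  share was 5 % - Sr Gomez agreed ."
print(normalize(raw))
```

Lowercasing is left as an opt-in flag, since whether "White" and "white" should be merged depends on the research question.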

Tokenization

Change linguistic unit: larger, smaller, or groupings.

It was the essence of life itself.

| Description | Examples |
|---|---|
| Unigrams | It, was, the, essence, of, life, itself |
| Bigrams | It was, was the, the essence, essence of, of life, life itself |
| Trigrams | It was the, was the essence, the essence of, essence of life, of life itself |
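The word-level n-grams above can be produced by sliding a window of size n over the word sequence. This is a sketch using only the standard library; the function name `word_ngrams` is an assumption for illustration, and the `\w+` pattern is a deliberately simple word definition.

```python
import re


def word_ngrams(text, n):
    """Return overlapping word n-grams, ignoring punctuation."""
    words = re.findall(r"\w+", text)
    # Slide a window of n words across the sequence.
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


sentence = "It was the essence of life itself."
for n in (1, 2, 3):
    print(n, word_ngrams(sentence, n))
```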

Tokenization

Change linguistic unit: larger, smaller, or groupings.

It was the essence of life itself.

| Description | Examples |
|---|---|
| Unigrams (characters) | I, t, w, a, s, t, h, e, e, s, s, e, n, c, e, o, f, l, i, f, e, i, t, s, e, l, f |
| Bigrams (characters) | It, tw, wa, as, st, th, he, ee, es, ss, se, en, nc, ce, eo, of, fl, li, if, fe, ei, it, ts, se, el, lf |
| Trigrams (characters) | Itw, twa, was, ast, sth, the, hee, ees, ess, sse, sen, enc, nce, ceo, eof, ofl, fli, lif, ife, fei, eit, its, tse, sel, elf |

Note: It is also possible to reconstruct larger tokens from smaller ones (for example, words from characters or sentences from words).
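A sketch of character-level n-grams and of the reconstruction mentioned in the note, using only the standard library. It assumes spaces and punctuation are dropped before forming n-grams (as the unigram row does) and that windows overlap, as in the word-level table; `char_ngrams` is a hypothetical helper name.

```python
def char_ngrams(text, n):
    """Return overlapping character n-grams, ignoring spaces and punctuation."""
    letters = [ch for ch in text if ch.isalpha()]
    return ["".join(letters[i:i + n]) for i in range(len(letters) - n + 1)]


sentence = "It was the essence of life itself."
print(char_ngrams(sentence, 2)[:5])  # first five character bigrams

# Reconstruction only works if the smaller units keep the separators.
characters = list(sentence)          # every character, spaces included
words = "".join(characters).split()  # characters -> words
rebuilt = " ".join(words)            # words -> sentence
print(rebuilt == sentence)
```

The design point is that tokenizing downward loses nothing as long as separators are retained; once spaces are dropped, as in the n-gram helper, word boundaries can no longer be recovered.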

Tokenization: case

Consider the following paragraph:

“As the sun dipped below the horizon, the sky was set ablaze with shades of orange-red, illuminating the landscape. It’s a sight Mr. Johnson, a long-time observer, never tired of. On the lakeside, he’d watch with friends, enjoying the ever-changing hues—especially those around 6:30 p.m.—and reflecting on nature’s grand display. Even in the half-light, the water’s glimmer, coupled with the echo of distant laughter, created a timeless scene. The so-called ‘magic hour’ was indeed magical, yet fleeting, like a well-crafted poem; it was the essence of life itself.”

What text conventions would pose issues for word tokenization based on a whitespace criterion?

Tokenization: example
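One way to explore the question is to compare a plain whitespace split with a slightly more careful regular expression on excerpts from the paragraph. The pattern below is an illustrative assumption that covers only the conventions in this passage (titles, clock times, "a.m."/"p.m.", hyphenated words, contractions); it is not a general-purpose tokenizer.

```python
import re

# Two excerpts from the paragraph above.
excerpt_1 = "It’s a sight Mr. Johnson, a long-time observer, never tired of."
excerpt_2 = (
    "enjoying the ever-changing hues—especially those around 6:30 p.m.—and "
    "reflecting on nature’s grand display."
)

# Alternatives are tried left to right, so special cases come first.
TOKEN_PATTERN = re.compile(
    r"(?:Mr|Mrs|Dr)\."         # titles keep their period
    r"|[ap]\.m\."              # a.m. / p.m.
    r"|\d+:\d+"                # clock times such as 6:30
    r"|\w+(?:[-’']\w+)*"       # words, hyphenated words, contractions
    r"|[^\w\s]"                # any other punctuation mark on its own
)


def whitespace_tokens(text):
    return text.split()


def regex_tokens(text):
    return TOKEN_PATTERN.findall(text)


for excerpt in (excerpt_1, excerpt_2):
    print(whitespace_tokens(excerpt))
    print(regex_tokens(excerpt))
```

The whitespace split leaves punctuation attached ("Johnson,") and fuses words joined by a dash with no surrounding spaces ("hues—especially"), which are the kinds of conventions the question asks about.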

Enrichment

Generation

Derive attributes: from implicit information in the dataset.

- Lemmatization

- Part-of-speech tagging

- Morphological analysis

- Named entity recognition

- Sentiment analysis

- Dependency parsing

- …

Generation: example

- Part-of-speech tagging, lemmatization, and morphological analysis
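A toy sketch of what generation produces: new columns (lemma, part of speech, morphology) derived from each token. In practice these come from a trained NLP pipeline; here a tiny hand-written lookup table stands in for one, so `LEXICON`, its labels, and `annotate` are all illustrative assumptions.

```python
# Hypothetical hand-written lexicon: form -> (lemma, part of speech, morphology).
# The labels loosely follow Universal Dependencies conventions.
LEXICON = {
    "it": ("it", "PRON", "Number=Sing"),
    "was": ("be", "AUX", "Tense=Past"),
    "the": ("the", "DET", "Definite=Def"),
    "essence": ("essence", "NOUN", "Number=Sing"),
    "of": ("of", "ADP", ""),
    "life": ("life", "NOUN", "Number=Sing"),
    "itself": ("itself", "PRON", "Reflex=Yes"),
}


def annotate(tokens):
    """Return one row per token with derived lemma, POS, and morphology."""
    rows = []
    for token in tokens:
        # Unknown words fall back to the lowercased form and the tag "X".
        lemma, pos, morph = LEXICON.get(token.lower(), (token.lower(), "X", ""))
        rows.append({"token": token, "lemma": lemma, "pos": pos, "morph": morph})
    return rows


for row in annotate(["It", "was", "the", "essence", "of", "life", "itself"]):
    print(row)
```

The point of the sketch is the shape of the result: the token column is unchanged, and each derived attribute becomes an additional column.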

Recoding

Recast values: to make explicit information more accessible by using a different grouping, scale, or measure.

Type: Numeric > ordinal > categorical

Scale:

- Logarithmic transformation

- Standardization

Measures: Results from a calculation

Recoding: example

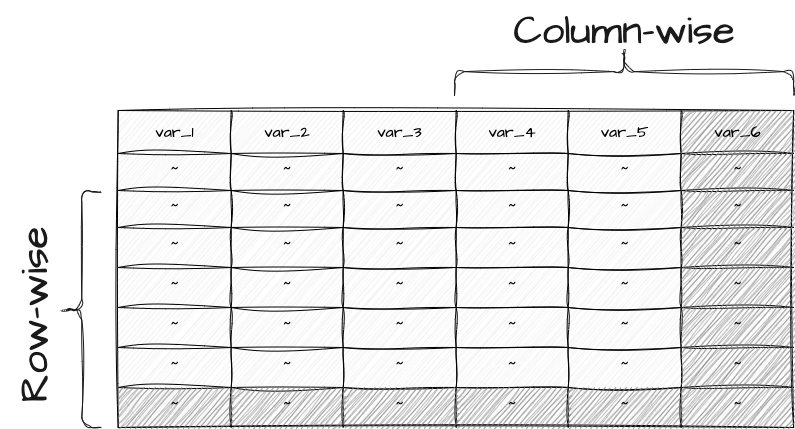

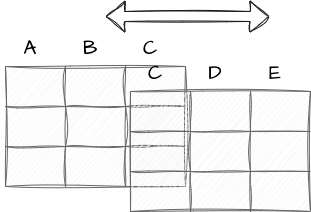

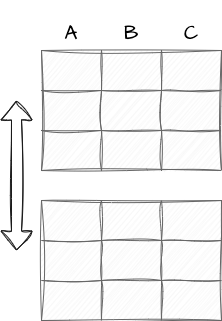
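The three kinds of recoding listed above (type, scale, measure) can be sketched with the standard library. The word counts are made-up values, the band cut-offs are arbitrary, and the type-token ratio is just one example of a calculated measure; none of these come from the original material.

```python
import math
import statistics

# Made-up document lengths (in words) for illustration only.
word_counts = [12, 45, 150, 980, 4300]


def length_band(count):
    """Type: recode a numeric value into an ordinal category."""
    if count < 100:
        return "short"
    if count < 1000:
        return "medium"
    return "long"


# Scale: logarithmic transformation compresses the long right tail.
log_counts = [math.log10(c) for c in word_counts]

# Scale: standardization expresses each value in standard deviations from the mean.
mean = statistics.mean(word_counts)
sd = statistics.stdev(word_counts)
z_scores = [(c - mean) / sd for c in word_counts]


def type_token_ratio(tokens):
    """Measure: a value calculated from other values (unique words / all words)."""
    return len(set(tokens)) / len(tokens)


print([length_band(c) for c in word_counts])
print([round(x, 2) for x in log_counts])
print([round(z, 2) for z in z_scores])
print(type_token_ratio(["the", "sky", "the", "sun"]))
```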

Integration

Juxtapose datasets: to create a new dataset.

- Join: to add columns or rows based on a common key.

- Concatenate: to add rows to a common set of columns.

Joining: example

- Sentiment lexicon
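A sketch of joining a tokenized dataset with a sentiment lexicon on the shared `word` key. The rows and the two-entry lexicon are hypothetical; a real analysis would load a published lexicon and would likely use a data-frame library rather than plain dictionaries.

```python
# Hypothetical tokenized dataset: one row per word.
tokens = [
    {"doc_id": 1, "word": "magical"},
    {"doc_id": 1, "word": "fleeting"},
    {"doc_id": 1, "word": "scene"},
]

# Hypothetical sentiment lexicon keyed by word.
SENTIMENT = {"magical": "positive", "fleeting": "negative"}


def left_join(rows, lexicon, key="word", new_column="sentiment"):
    """Add a column from the lexicon; unmatched rows get None (a left join)."""
    return [{**row, new_column: lexicon.get(row[key])} for row in rows]


joined = left_join(tokens, SENTIMENT)
for row in joined:
    print(row)
```

A left join keeps every token, so words missing from the lexicon remain visible as `None` and can be counted as a quick diagnostic of lexicon coverage.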

Concatenating: example

- Two populations
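A sketch of concatenating two datasets that share the same columns, adding a column that records which population each row came from. The population names, rows, and the `concatenate` helper are hypothetical.

```python
# Hypothetical datasets with identical columns, one per population.
natives = [
    {"text_id": "n01", "word_count": 210},
    {"text_id": "n02", "word_count": 185},
]
learners = [
    {"text_id": "l01", "word_count": 96},
]


def concatenate(**datasets):
    """Stack rows from several datasets and label each row with its source."""
    columns = None
    combined = []
    for name, rows in datasets.items():
        for row in rows:
            if columns is None:
                columns = set(row)
            # Concatenation assumes a common set of columns; fail loudly otherwise.
            if set(row) != columns:
                raise ValueError(f"columns differ in dataset {name!r}")
            combined.append({**row, "population": name})
    return combined


combined = concatenate(native=natives, learner=learners)
for row in combined:
    print(row)
```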

Final thoughts

- Transformation is a critical step in the data analysis process.

- It builds on the curated dataset to create one or more datasets that are more in line with the analysis goals.

- It is an iterative process.

- Apply diagnostics and validation as you go along.

References

Transformation | Quantitative Text Analysis | Wake Forest University