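| | text_id | sentence_id | word_id | word |
|---|---|---|---|---|
| 1 | 1 | 1 | 1 | The |
| 2 | 1 | 1 | 2 | quick |
| 3 | 1 | 1 | 3 | brown |
| 4 | 1 | 1 | 4 | fox |
| 5 | 1 | 1 | 5 | jumps |
| 6 | 1 | 1 | 6 | over |
| 7 | 1 | 1 | 7 | the |
| 8 | 1 | 1 | 8 | lazy |
| 9 | 1 | 1 | 9 | dog |
| 10 | 1 | 1 | 10 | . |

Data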

Understanding data and information

Jan 31, 2024

Overview

Up for today:

- Understanding data

- From data to information

- Documenting the process

Looking ahead:

- Recipe and lab 02

Quick reminders

Keeping up with this work is in your best interest.

Don’t forget the lessons! They are key to making sure you are ready for the upcoming programming portions of the labs.

Understanding data

The raw material of data science

Populations and samples

Population

An idealized set of objects or events that share a common characteristic or belong to a specific category.

Sample

A finite set of objects or events drawn from a defined population.

Sampling

Sampling frame

Defining the population of interest.

Representativeness

The degree to which a sample reflects the characteristics of the population from which it is drawn.

- All samples are biased to some extent.

- Some samples are more biased than others.

Minimize bias

- Size

- Randomization

- Stratification

- Balance
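As a rough illustration of how randomization and stratification can work together, the Python sketch below draws the same number of random items from each stratum of a toy population. The `population` records, the `genre` strata, and the `stratified_sample` helper are all hypothetical, made up for this example.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(items, key, n_per_stratum, seed=0):
    """Draw n_per_stratum random items from each stratum defined by key(item)."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    strata = defaultdict(list)
    for item in items:
        strata[key(item)].append(item)
    sample = []
    for label in sorted(strata):
        # rng.sample draws without replacement within one stratum
        sample.extend(rng.sample(strata[label], n_per_stratum))
    return sample

# Hypothetical population: 90 news texts and 10 fiction texts
population = [{"id": i, "genre": "news" if i < 90 else "fiction"} for i in range(100)]

sample = stratified_sample(population, key=lambda doc: doc["genre"], n_per_stratum=5)
counts = Counter(doc["genre"] for doc in sample)
print(dict(counts))  # five texts per genre
```

A simple random sample of 10 texts from this population would contain only one fiction text on average; sampling within strata is one way to keep the smaller group from being underrepresented.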

Corpora

| Type | Sampling scope | Example |
|---|---|---|
| Reference | General characteristics of a language population | ANC¹ |
| Specialized | Specific populations, e.g. spoken language, academic writing, etc. | SBCSAE² |
| Parallel | Directly comparable texts in different languages (i.e. translations) | Europarl³ |
| Comparable | Indirectly comparable texts in different languages or language varieties (i.e. similar sampling frames) | Brown and LOB⁴ |

Corpus formats

XML

<text id="1">
  <sentence id="1">
    <word id="1">The</word>
    <word id="2">quick</word>
    <word id="3">brown</word>
    <word id="4">fox</word>
    <word id="5">jumps</word>
    <word id="6">over</word>
    <word id="7">the</word>
    <word id="8">lazy</word>
    <word id="9">dog</word>
    <word id="10">.</word>
  </sentence>
</text>

R data frame
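To make the link between the nested XML markup and a tabular layout concrete, here is a minimal Python sketch that flattens the text, sentence, and word hierarchy into one row per word. It uses only the standard library; the `xml_to_rows` helper is a name chosen for this illustration, and the slides themselves present the tabular version as an R data frame.

```python
import xml.etree.ElementTree as ET

xml_source = """
<text id="1">
  <sentence id="1">
    <word id="1">The</word>
    <word id="2">quick</word>
    <word id="3">brown</word>
    <word id="4">fox</word>
    <word id="5">jumps</word>
    <word id="6">over</word>
    <word id="7">the</word>
    <word id="8">lazy</word>
    <word id="9">dog</word>
    <word id="10">.</word>
  </sentence>
</text>
"""

def xml_to_rows(source):
    """Flatten text > sentence > word markup into one dict per word."""
    text = ET.fromstring(source.strip())
    rows = []
    for sentence in text.iter("sentence"):
        for word in sentence.iter("word"):
            rows.append({
                "text_id": int(text.get("id")),          # attribute of the root element
                "sentence_id": int(sentence.get("id")),  # attribute of the parent sentence
                "word_id": int(word.get("id")),
                "word": word.text,
            })
    return rows

rows = xml_to_rows(xml_source)
for row in rows[:3]:
    print(row)
```

Each level of nesting in the XML becomes a column, so the hierarchy is preserved as repeated identifier values rather than as enclosing tags.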

From data to information

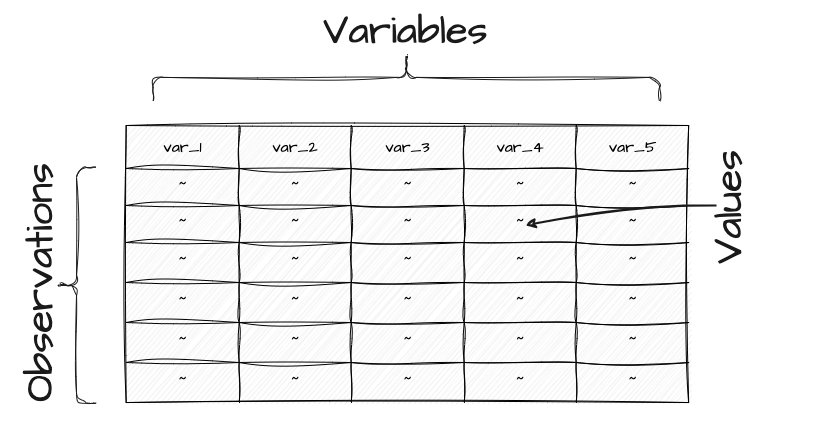

Tidy data

Physical structure

Tidy data

Semantic structure

| | title | date | modality | domain | ref_num | word | lemma | pos |
|---|---|---|---|---|---|---|---|---|
| | <chr> | <dbl> | <fct> | <chr> | <int> | <chr> | <chr> | <chr> |
| 1 | Hotel California | 2008 | Writing | General Fiction | 1 | Sound | sound | NNP |
| 2 | Hotel California | 2008 | Writing | General Fiction | 2 | is | be | VBZ |
| 3 | Hotel California | 2008 | Writing | General Fiction | 3 | a | a | DT |
| 4 | Hotel California | 2008 | Writing | General Fiction | 4 | vibration | vibration | NN |
| 5 | Hotel California | 2008 | Writing | General Fiction | 5 | . | . | . |
| 6 | Hotel California | 2008 | Writing | General Fiction | 6 | Sound | sound | NNP |
| 7 | Hotel California | 2008 | Writing | General Fiction | 7 | travels | travel | VBZ |
| 8 | Hotel California | 2008 | Writing | General Fiction | 8 | as | as | IN |
| 9 | Hotel California | 2008 | Writing | General Fiction | 9 | a | a | DT |
| 10 | Hotel California | 2008 | Writing | General Fiction | 10 | mechanical | mechanical | JJ |

- Levels of measurement

- Unit of observation

Levels of measurement

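| essay_id | part_id | sex | group | tokens | types | ttr | prop_l2 |
|---|---|---|---|---|---|---|---|
| E1 | L01 | female | T2 | 79 | 46 | 0.582 | 0.987 |
| E2 | L02 | female | T1 | 18 | 18 | 1 | 0.667 |
| E7 | L07 | male | T3 | 98 | 60 | 0.612 | 1 |
| E3 | L02 | female | T3 | 101 | 53 | 0.525 | 1 |
| E4 | L05 | female | T1 | 20 | 17 | 0.85 | 0.9 |
| E8 | L07 | male | T4 | 134 | 84 | 0.627 | 0.978 |
| E5 | L05 | female | T3 | 158 | 80 | 0.506 | 0.987 |
| E6 | L05 | female | T4 | 184 | 94 | 0.511 | 0.995 |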

What are the levels of measurement?

| Level | Description | Question |
|---|---|---|
| Categorical | Mutually exclusive categories | What? |
| Ordinal | Ordered categories | What order? |
| Numeric | Ordered values with equal intervals | How much/many? |
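The level of measurement determines which summaries make sense. The Python sketch below applies one summary per level to three variables copied from the essay table above. Treating `sex` as categorical, `group` as ordinal (with the assumed order T1 < T2 < T3 < T4), and `tokens` as numeric is one plausible reading of that table, not something the slides state.

```python
from collections import Counter
from statistics import mean, median_low

# Three variables copied from the essay table, in row order
sex = ["female", "female", "male", "female", "female", "male", "female", "female"]
group = ["T2", "T1", "T3", "T3", "T1", "T4", "T3", "T4"]
tokens = [79, 18, 98, 101, 20, 134, 158, 184]

# Categorical: counting category membership is the meaningful operation
sex_counts = Counter(sex)

# Ordinal (assumed order T1 < T2 < T3 < T4): rank-based summaries such as the median
group_order = ["T1", "T2", "T3", "T4"]
ranks = [group_order.index(g) for g in group]
median_group = group_order[median_low(ranks)]

# Numeric: arithmetic such as the mean is meaningful
mean_tokens = mean(tokens)

print(dict(sex_counts), median_group, mean_tokens)
```

Averaging `group` labels or ranking `sex` values would run without complaint in most software, which is why the level of each variable is worth recording explicitly.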

Unit of observation

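| essay_id | part_id | sex | group | tokens | types | ttr | prop_l2 |
|---|---|---|---|---|---|---|---|
| E1 | L01 | female | T2 | 79 | 46 | 0.582 | 0.987 |
| E2 | L02 | female | T1 | 18 | 18 | 1 | 0.667 |
| E7 | L07 | male | T3 | 98 | 60 | 0.612 | 1 |
| E3 | L02 | female | T3 | 101 | 53 | 0.525 | 1 |
| E4 | L05 | female | T1 | 20 | 17 | 0.85 | 0.9 |
| E8 | L07 | male | T4 | 134 | 84 | 0.627 | 0.978 |
| E5 | L05 | female | T3 | 158 | 80 | 0.506 | 0.987 |
| E6 | L05 | female | T4 | 184 | 94 | 0.511 | 0.995 |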

What is the unit of observation?
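One way to probe this question is to ask what a single row describes and which identifier is unique per row. In the table above `essay_id` never repeats while `part_id` does, which suggests (as an assumption of this sketch) that each row is one essay. The Python sketch below re-aggregates the same values so that each row describes a participant instead, changing the unit of observation.

```python
from collections import defaultdict

# (essay_id, part_id, tokens) copied from the table, in row order
essays = [
    ("E1", "L01", 79), ("E2", "L02", 18), ("E7", "L07", 98), ("E3", "L02", 101),
    ("E4", "L05", 20), ("E8", "L07", 134), ("E5", "L05", 158), ("E6", "L05", 184),
]

# Collapse essay rows into one summary row per participant
per_participant = defaultdict(lambda: {"n_essays": 0, "tokens": 0})
for essay_id, part_id, tokens in essays:
    per_participant[part_id]["n_essays"] += 1
    per_participant[part_id]["tokens"] += tokens

for part_id in sorted(per_participant):
    print(part_id, per_participant[part_id])
```

The eight essay-level rows become four participant-level rows, so the same data can support different units of observation depending on the research question.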

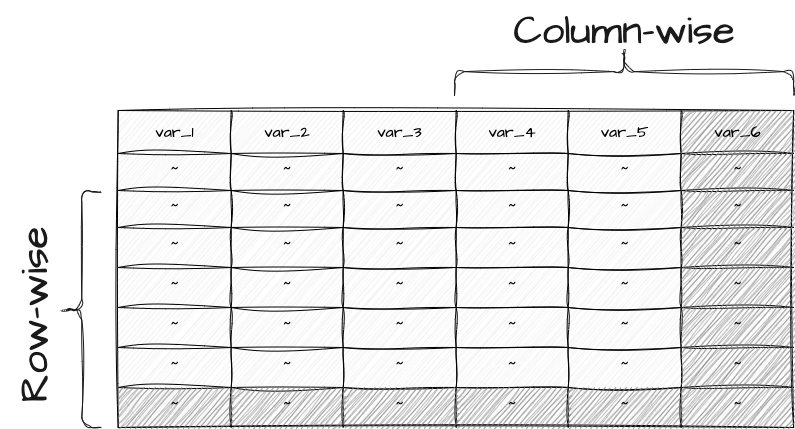

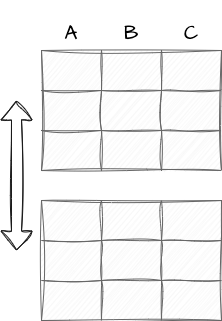

Transformation

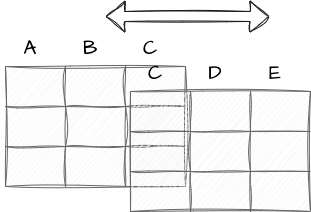

Reshaping

Types: preparation

Clean, standardize, and derive key attributes

| Type | Example |
|---|---|
| Case | Lower, UPPER, Title Case |
| Remove | Punctuation, special characters |
| Replace | Abbreviations, contractions |
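The three preparation types can be combined into a small normalization step. The Python sketch below is a minimal illustration; the `normalize` helper and the short `REPLACEMENTS` table are hypothetical, and a real project would need a much fuller list.

```python
import re

# Hypothetical replacement table for contractions and abbreviations
REPLACEMENTS = {"don't": "do not", "it's": "it is", "e.g.": "for example"}

def normalize(text):
    """Lowercase, expand listed contractions/abbreviations, then strip punctuation."""
    text = text.lower()                          # Case
    for old, new in REPLACEMENTS.items():        # Replace
        text = text.replace(old, new)
    text = re.sub(r"[^\w\s]", "", text)          # Remove punctuation and special characters
    return re.sub(r"\s+", " ", text).strip()     # collapse any leftover whitespace

print(normalize("Don't forget: it's RAW data, e.g. tweets!"))
# → do not forget it is raw data for example tweets
```

The order of the steps matters: removing punctuation before replacing would turn "don't" into "dont", and the contraction would no longer match the replacement table.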

Any linguistic unit that can be operationalized.
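As an example of operationalizing a unit, the Python sketch below tokenizes a sentence into word bigrams, one per row. The input sentence is reconstructed from the rows that follow, and the `word_ngrams` helper is a name chosen for this illustration.

```python
def word_ngrams(text, n=2):
    """Lowercase, split on whitespace, and return overlapping word n-grams."""
    words = text.lower().split()
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]

# Sentence reconstructed from the bigram rows (an assumption about the original input)
sentence = "Tokenization enables the quantitative analysis of text"

rows = [{"text_id": 1, "word": gram} for gram in word_ngrams(sentence)]
for row in rows:
    print(row)
```

A sentence of seven words yields six bigrams; changing `n` switches the unit to single words (`n=1`) or trigrams (`n=3`) without changing the rest of the pipeline.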

# A tibble: 6 × 2

| | text_id | word |
|---|---|---|
| | <dbl> | <chr> |
| 1 | 1 | tokenization enables |
| 2 | 1 | enables the |
| 3 | 1 | the quantitative |
| 4 | 1 | quantitative analysis |
| 5 | 1 | analysis of |
| 6 | 1 | of text |

Types: enrichment

Augment the dataset with additional information

- Decrease levels

- Increase levels
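Recoding to decrease the number of levels can be sketched as a lookup from fine-grained tags to coarser categories. The Python sketch below mirrors the tag-to-category pairs in the table that follows; the `POS_TO_CAT` name and the "Other" fallback for unlisted tags are assumptions of this example.

```python
# Lookup from fine-grained part-of-speech tags to coarser categories
POS_TO_CAT = {
    "NN": "Noun", "NNS": "Noun",
    "VBZ": "Verb",
    "JJ": "Adjective",
    "RBR": "Adverb",
    "IN": "Preposition", "TO": "Preposition",
}

tagged = [
    ("Recoding", "NN"), ("transforms", "VBZ"), ("values", "NNS"),
    ("to", "TO"), ("new", "JJ"),
]

# Tags missing from the lookup fall back to "Other" (an assumption of this sketch)
recoded = [(word, pos, POS_TO_CAT.get(pos, "Other")) for word, pos in tagged]
for row in recoded:
    print(row)

n_tags = len(POS_TO_CAT)                     # 7 fine-grained levels
n_categories = len(set(POS_TO_CAT.values())) # 5 coarse levels
```

Seven tags collapse into five categories here, which is the sense in which recoding decreases levels.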

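# A tibble: 10 × 3

| | word | pos | cat |
|---|---|---|---|
| | <chr> | <chr> | <chr> |
| 1 | Recoding | NN | Noun |
| 2 | transforms | VBZ | Verb |
| 3 | values | NNS | Noun |
| 4 | to | TO | Preposition |
| 5 | new | JJ | Adjective |
| 6 | values | NNS | Noun |
| 7 | more | RBR | Adverb |
| 8 | suitable | JJ | Adjective |
| 9 | for | IN | Preposition |
| 10 | analysis | NN | Noun |

# A tibble: 11 × 6

| | sent_id | token_id | token | xpos | features | syntactic_relation |
|---|---|---|---|---|---|---|
| | <dbl> | <chr> | <chr> | <chr> | <chr> | <chr> |
| 1 | 1 | 1 | Wow | UH | <NA> | discourse |
| 2 | 1 | 2 | , | , | <NA> | punct |
| 3 | 1 | 3 | this | DT | Number=Sing\|PronType=Dem | nsubj |
| 4 | 1 | 4 | is | VBZ | Mood=Ind\|Number=Sing\|Pers… | cop |
| 5 | 1 | 5 | a | DT | Definite=Ind\|PronType=Art | det |
| 6 | 1 | 6 | great | JJ | Degree=Pos | amod |
| 7 | 1 | 7 | tool | NN | Number=Sing | root |
| 8 | 1 | 8 | for | IN | <NA> | case |
| 9 | 1 | 9 | text | NN | Number=Sing | compound |
| 10 | 1 | 10 | analysis | NN | Number=Sing | nmod |
| 11 | 1 | 11 | ! | . | <NA> | punct |

Concatenate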

Join
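The difference between the two operations can be sketched in a few lines of Python: concatenating stacks rows that share the same columns, while joining adds columns by matching rows on a shared key. The split into two batches and the separate `participants` table are hypothetical arrangements of values from the essay table shown earlier.

```python
# Two hypothetical batches of essay rows with identical columns
essays_part1 = [
    {"essay_id": "E1", "part_id": "L01", "tokens": 79},
    {"essay_id": "E2", "part_id": "L02", "tokens": 18},
]
essays_part2 = [
    {"essay_id": "E7", "part_id": "L07", "tokens": 98},
]

# Concatenate: stack the rows, columns stay the same
essays = essays_part1 + essays_part2

# Hypothetical participant-level table sharing the key part_id
participants = [
    {"part_id": "L01", "sex": "female"},
    {"part_id": "L02", "sex": "female"},
    {"part_id": "L07", "sex": "male"},
]

# Join: look up each essay's participant by key and merge the columns.
# Essays with no matching participant keep only their own columns.
by_id = {p["part_id"]: p for p in participants}
joined = [{**essay, **by_id.get(essay["part_id"], {})} for essay in essays]

for row in joined:
    print(row)
```

Concatenation changes the number of rows, whereas this kind of join keeps one row per essay and changes the number of columns.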

Documenting the process

Data origin

| Information | Description |
|---|---|
| Resource name | Name of the corpus resource. |
| Data source | URL, DOI, etc. |
| Data sampling frame | Language, language variety, modality, genre, etc. |
| Data collection date(s) | The date or date range of the data collection. |
| Data format | Plain text, XML, HTML, etc. |
| Data schema | Relationships between data elements: files, folders, etc. |
| License | CC BY, CC BY-NC, etc. |
| Attribution | Citation information for the data source. |

Data dictionary

| Information | Description |
|---|---|
| Variable name | The name of the variable as it appears in the dataset, e.g. participant_id, modality, etc. |
| Readable variable name | A human-readable name for the variable, e.g. ‘Participant ID’, ‘Language modality’, etc. |
| Variable type | The type of information that the variable contains, e.g. ‘categorical’, ‘ordinal’, etc. |
| Variable description | A prose description that expands on the readable name and can include measurement units, allowed values, etc. |
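A data dictionary is itself a small dataset, so it can be stored alongside the data as a delimited file. The Python sketch below writes a few entries to CSV using the four kinds of information listed above. The column names in `FIELDS` and the entries themselves are illustrative guesses for the essay table shown earlier, not its official documentation.

```python
import csv
import io

# One column per kind of information in a data dictionary (names are assumptions)
FIELDS = ["variable", "name", "type", "description"]

# Illustrative entries; the types and descriptions are guesses for this sketch
entries = [
    {"variable": "essay_id", "name": "Essay ID", "type": "categorical",
     "description": "Unique identifier for each essay."},
    {"variable": "group", "name": "Group", "type": "ordinal",
     "description": "Group label from T1 to T4."},
    {"variable": "tokens", "name": "Tokens", "type": "numeric",
     "description": "Number of word tokens in the essay."},
]

def to_csv(rows):
    """Serialize data dictionary rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

dictionary_csv = to_csv(entries)
print(dictionary_csv)
```

Keeping the dictionary in a plain, machine-readable file means it can be versioned and updated together with the dataset it describes.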

Looking ahead

Recipe and lab

- Recipe 02: Reading, inspecting, and writing datasets

- Lab 02: Dive into datasets

Data | Quantitative Text Analysis | Wake Forest University

Footnotes

1. The OANC is a large collection of written and spoken American English from 1990 onwards, with freely available data and annotations.

2. The Santa Barbara Corpus includes transcriptions and audio recordings of natural conversations from across the US.

3. The Europarl Parallel Corpus is a collection of proceedings from the European Parliament translated into 21 European languages and aligned at the sentence level to build datasets for statistical machine translation research.

4. The Brown Corpus is the first computer-readable general corpus. It consists of edited American English texts from 1961 and contains approximately 1 million words across 500 samples.